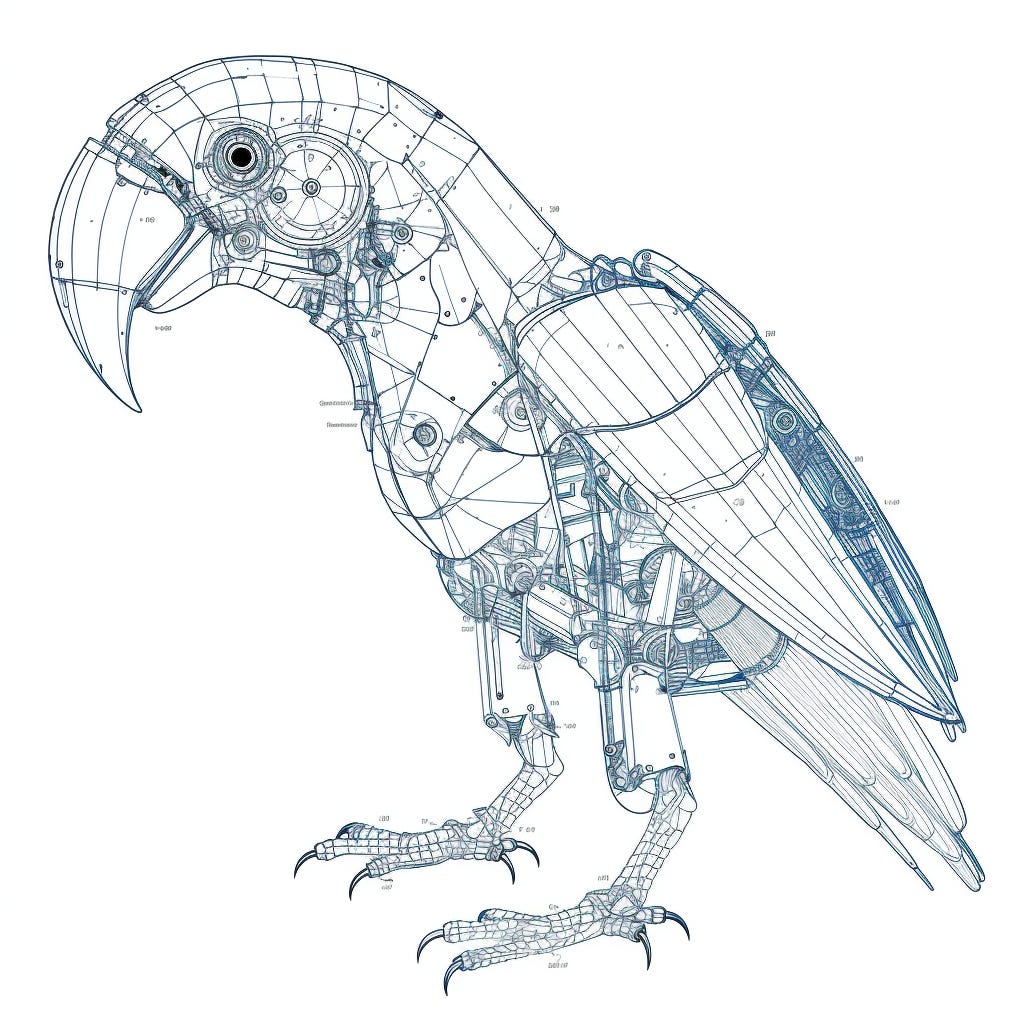

Don’t settle for a superficial understanding of how AI chatbots work

“Stochastic parrots” doesn't explain the magic

Debating whether AI chatbots “understand” things is bad philosophy. It’s as if you went to an impressive magic show and got into an argument over whether it’s “real magic” or there’s “some trick to it.” There are always tricks, but if you don’t know what they are, you haven’t solved the mystery of how it’s done.

Douglas Adams wrote a funny story about a machine that thought for a long time and answered “42,” a useless answer because the question wasn’t a good one. To ask a good question, it can be helpful to think about what you’d learn from the answer. If you asked, “does the chatbot understand?” and God told you “yes, it understands,” or “no, it doesn’t,” what would you have learned? The mystery would still be there.

Researchers know how they built large language models, but they know hardly any of the specifics about how a model decides which words to write in a particular situation. (An exception: the best thing I’ve read that’s specific about how a language model works is “We Found An Neuron in GPT-2.” If anyone knows of other research like this, I’d love to read it.)¹

What will we get from real insight? An example from a related field is the discovery that ImageNet-trained CNNs are biased towards texture, whereas people rely more on shape.

It will take research, not just casually trying out chatbots. The screenshots that people share show how a chatbot reacted one time, but since the output is random, you need to regenerate repeatedly to get a sense of the distribution. Such “black box” experiments will help, but I suspect really figuring it out will require some good debugging tools and techniques.
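To see why one screenshot says so little, it helps to remember that a chatbot picks each next word by sampling from a probability distribution, so every regeneration is a new random draw. The sketch below is a toy illustration under loudly stated assumptions: a made-up three-word vocabulary, hypothetical scores in `LOGITS`, and a plain softmax-with-temperature sampler. Real models score tens of thousands of tokens and their sampling settings vary.

```python
import math
import random
from collections import Counter


def softmax(logits, temperature=1.0):
    """Convert raw scores into probabilities; a higher temperature flattens them."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_next_word(words, logits, rng, temperature=1.0):
    """Draw one word at random, weighted by its softmax probability."""
    probs = softmax(logits, temperature)
    return rng.choices(words, weights=probs, k=1)[0]


# Hypothetical toy vocabulary and scores for a single next-word choice.
# These numbers are made up for illustration; they come from no real model.
WORDS = ["yes", "no", "maybe"]
LOGITS = [2.0, 1.0, 0.5]


def regenerate(n, seed=0, temperature=1.0):
    """Simulate pressing 'regenerate' n times and tally the answers."""
    rng = random.Random(seed)
    return Counter(sample_next_word(WORDS, LOGITS, rng, temperature) for _ in range(n))


if __name__ == "__main__":
    # A single draw is like a single shared screenshot.
    print("one screenshot:", regenerate(1))
    # Many draws approximate the underlying distribution.
    n = 10_000
    counts = regenerate(n)
    for word, p in zip(WORDS, softmax(LOGITS)):
        print(f"{word}: expected {p:.2f}, observed {counts[word] / n:.2f}")
```

Even with only three possible answers, a single run can land on any of them; only the tally over many runs shows how likely each one is. This is still a “black box” experiment: it describes what comes out, not why the model assigns those scores.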

Debating whether chatbots have “world models” is similar. If they have them, we should want to know what they are and how they work. If they don’t, we should want to know what they do instead.

Since most of us aren’t AI researchers, what does “not settling” mean in practice? We should remember that there are mysteries and reject superficial explanations. Chatbot boosters will promote chatbots and skeptics will dismiss them, but that doesn’t mean either side understands how they work.

Update: apparently this subfield is called “Mechanistic Interpretability,” and if you’re interested, Neel Nanda is a researcher who has written some posts about how to get started.