Making a Pac-Man animated gif with ChatGPT's Code Interpreter

It was fun, but it would probably get old fast.

ChatGPT’s Code Interpreter feature became available to paid users a few days ago. If you want to try it out, I wrote about how to get started here. Code Interpreter uses Python, so I've been asking it to create pictures by writing Python code.

After struggling to get it to extract sprites from a Space Invaders screenshot, I decided that animating just one sprite would be a better task. I chose Pac-Man since it’s easy to draw.

First attempt

To animate a Pac-Man, we need to draw it facing in four directions and with its mouth open at different angles. This took some trial and error. GPT4 knows what Pac-Man is and tried to draw it, but it got confused about the parameters to matplotlib’s patches.Wedge class:

Although it talks a good game, GPT4 often seems to struggle with math, including basic geometry. It gets coordinates wrong, gets angles backwards, and makes other mistakes like that. It’s very good at understanding context and coming up with likely fixes, but it often only pretends to know what the bug is. This is much like what programmers often do when we try to fix a bug that we don’t really understand. Sometimes it works!
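For reference, here is a minimal sketch of how Wedge's angle parameters behave. It is not the code from the transcript. The helper names (pacman_wedge, render_png), the direction table, and the colors are all made up for illustration. The point it shows is that Wedge takes angles in degrees and sweeps counterclockwise from theta1 to theta2, so the body has to go the long way around the mouth.

```python
import io

from matplotlib.figure import Figure
from matplotlib.patches import Wedge

# Hypothetical direction table. Matplotlib measures angles in degrees,
# counterclockwise from the +x axis (with the default y-up axes).
FACING_DEG = {"right": 0, "up": 90, "left": 180, "down": 270}


def pacman_wedge(direction, mouth_deg, radius=1.0):
    """Return a Wedge for the body, leaving a mouth of mouth_deg degrees."""
    facing = FACING_DEG[direction]
    half = mouth_deg / 2
    # The wedge sweeps counterclockwise from theta1 to theta2, so start at
    # one lip and go around the back of the head to the other lip.
    theta1 = facing + half
    theta2 = facing - half + 360
    return Wedge((0, 0), radius, theta1, theta2,
                 facecolor="yellow", edgecolor="black")


def render_png(wedge):
    """Render the wedge on a small square canvas and return PNG bytes."""
    fig = Figure(figsize=(2, 2))
    ax = fig.subplots()
    ax.add_patch(wedge)
    ax.set_xlim(-1.2, 1.2)
    ax.set_ylim(-1.2, 1.2)
    ax.set_aspect("equal")  # otherwise the circle may render as an ellipse
    ax.axis("off")
    buf = io.BytesIO()
    fig.savefig(buf, format="png")
    return buf.getvalue()
```

Swapping theta1 and theta2 here would draw only the mouth-sized slice instead of the body, which is one easy way to get an angle "backwards."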

I saw some bizarre behavior. If you tell GPT4 that it’s wrong enough times, it will learn the pattern and then go into an infinite loop of correcting itself. It stopped showing me the picture and asking for feedback:

Bizarre but relatable? Here’s the transcript for that attempt. Unfortunately, it looks terrible because all the images are missing. Code Interpreter is in beta and hopefully that will be fixed.

Trying again

I started from scratch, hoping we would have better luck with a different graphics library. I know little about Python’s graphics libraries, so I asked GPT4 what’s available. Pillow sounded interesting so I went with that one. (It’s neat how AI assistance makes it easy to use a library you don’t know.)

I wondered if perhaps GPT4 being trained on other people’s ways of drawing Pac-Man was causing interference. So instead of asking it to draw Pac-Man, I asked it to draw a pie with a piece cut out. I had it draw an outline at first:

This approach worked better. GPT4 wouldn’t do exactly what I wanted, but I could ask for adjustments and it would make them easily.
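A pie with a piece cut out maps naturally onto Pillow's ImageDraw.pieslice. Below is a minimal sketch of the outline version, not the code GPT4 actually produced. The function name draw_pie_outline and its parameters are assumptions. Note that Pillow's angle convention differs from matplotlib's: angles are in degrees, measured from 3 o'clock and increasing clockwise.

```python
from PIL import Image, ImageDraw


def draw_pie_outline(size=200, cut_deg=60, margin=10):
    """Draw the outline of a pie with a cut_deg-degree piece missing on the right."""
    img = Image.new("RGB", (size, size), "white")
    draw = ImageDraw.Draw(img)
    box = (margin, margin, size - margin, size - margin)
    # Pillow draws the slice from the start angle to the end angle, clockwise
    # from 3 o'clock. Starting at cut_deg / 2 and ending at 360 - cut_deg / 2
    # leaves the gap centered on the right-hand side.
    draw.pieslice(box, cut_deg / 2, 360 - cut_deg / 2, outline="black", width=3)
    return img
```

Calling draw_pie_outline().save("pie.png") would write the picture to disk; passing fill="yellow" to pieslice would give a filled shape instead of an outline.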

I didn’t want to pretend it wasn’t a Pac-Man any longer, so I asked GPT4 what this picture reminded it of:

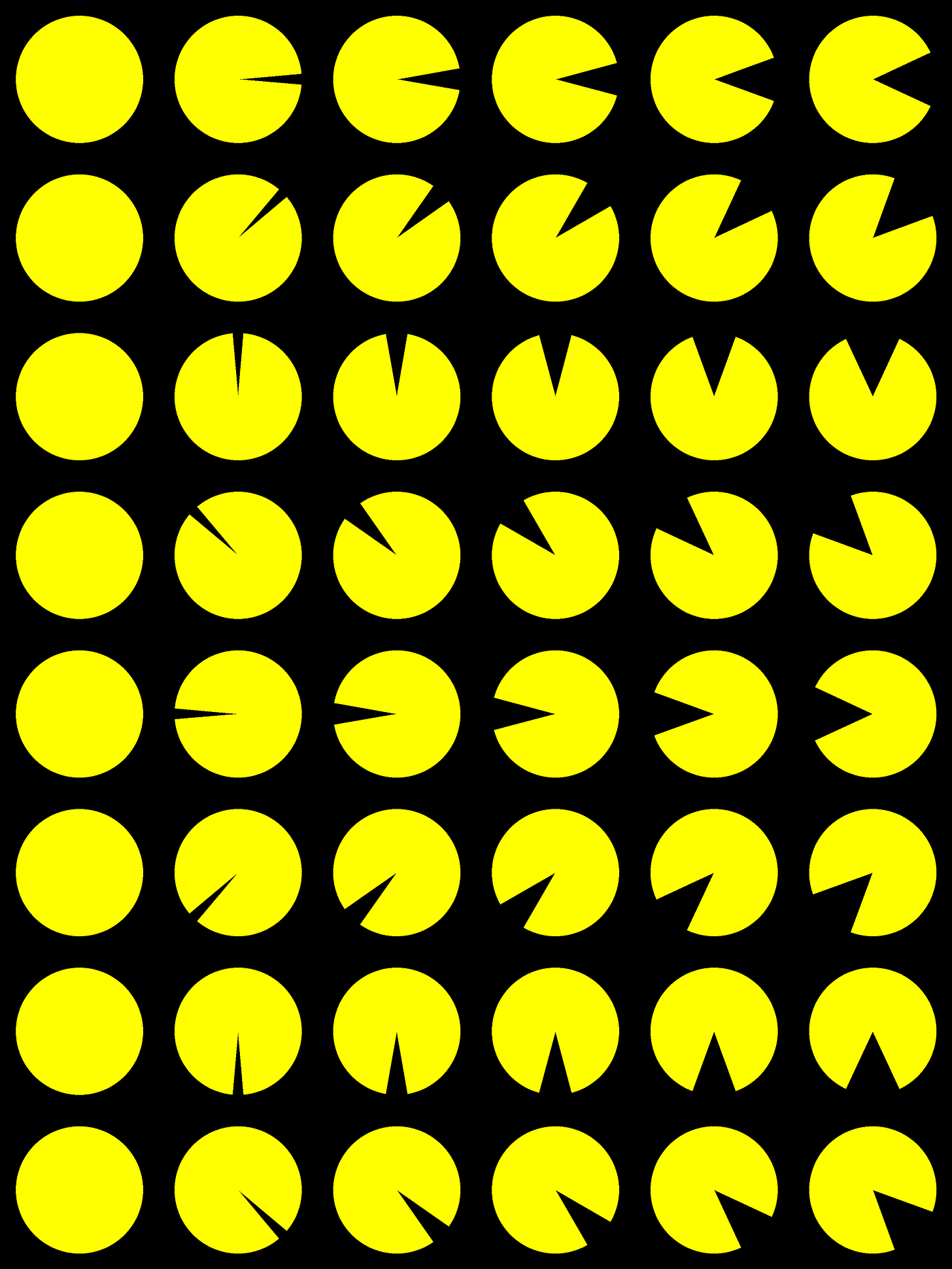

Here’s a grid showing what the draw_pacman function can do:

ChatGPT’s UI doesn’t support displaying animated gifs within the chat, but it can create them and will give you a URL to download the file. Making an animation required a lot of trial and error to get the motion right, but it was otherwise pretty straightforward. Here’s the result:

The full transcript is here. (Unfortunately, you can’t download files from a shared transcript either.)
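The animation step can be sketched with Pillow roughly as follows. This is an assumption-heavy illustration: the post doesn't show the real draw_pacman signature, so the version below is a hypothetical stand-in, and the frame count, timing, size, and colors are made up. It only animates the mouth and leaves out any movement across the screen.

```python
from PIL import Image, ImageDraw

# Pillow angles are in degrees, clockwise from 3 o'clock, so "down" is 90
# and "up" is 270 (the image y axis points down).
FACING_DEG = {"right": 0, "down": 90, "left": 180, "up": 270}


def draw_pacman(direction, mouth_deg, size=64):
    """Hypothetical stand-in: a yellow Pac-Man on black, facing `direction`."""
    img = Image.new("RGB", (size, size), "black")
    draw = ImageDraw.Draw(img)
    box = (2, 2, size - 2, size - 2)
    if mouth_deg <= 0:
        # A closed mouth is just a full disc.
        draw.ellipse(box, fill="yellow")
        return img
    start = FACING_DEG[direction] + mouth_deg / 2
    end = start + (360 - mouth_deg)  # sweep the body, skipping the mouth
    draw.pieslice(box, start, end, fill="yellow")
    return img


def make_frames(direction="right", max_mouth=80, steps=5):
    """Open the mouth in equal steps, then close it again."""
    opening = [max_mouth * i // steps for i in range(steps + 1)]
    # Drop the first and last angles on the way back so that the looped
    # animation doesn't show the same frame twice in a row.
    angles = opening + opening[-2:0:-1]
    return [draw_pacman(direction, a) for a in angles]


def save_gif(frames, fp, ms_per_frame=60):
    """Write the frames as a looping animated GIF to a path or file object."""
    frames[0].save(
        fp,
        format="GIF",
        save_all=True,
        append_images=frames[1:],
        duration=ms_per_frame,
        loop=0,  # 0 means loop forever
    )
```

For example, save_gif(make_frames("left"), "pacman.gif") would write a left-facing chomping animation to a file that can then be downloaded.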

Conclusions

GPT4 works better when you take the lead. Ask leading questions. If you know something about the technology or the problem, you can give it hints.

Code Interpreter is fun at first, but it’s too tedious to use all the time. Here is the pattern I expect: a human programmer will often start out knowing less than GPT4 about some aspects of a new task. But we learn quickly. Once you know more about a task than GPT4 does, telling it what to do becomes frustrating, sort of like a coding interview that’s not going very well. At that point, it makes more sense to do it yourself. Unfortunately, ChatGPT’s UI doesn’t support editing the code yourself, so you’re forced to be the backseat driver. I haven’t tried Copilot yet; perhaps that UI works better?

Asking GPT4 to fix a bug may work if it selects the right fix the first time, but any further attempts are likely futile. Even if it eventually worked, there is little point in getting GPT4 to learn from its mistakes since it will forget anything it learned when you start a new chat, or sometimes sooner. (Learning from our mistakes is valuable for people, but AIs aren’t very good at it yet.)

GPT4 has a limited context window (short-term memory) and there are more important things for it to remember than its mistakes. So, better to “rewrite history.” Edit the prompt and regenerate. A bit of “prompt engineering” can help it avoid the mistake.

Rewriting history might seem wrong. Keeping a history of mistakes made along the way would be useful for ethnomethodology and your “bloopers reel” if you want to make one. But a clean history is easier to read and gets better results from the bot. This is much like how some programmers will rewrite history to create a clean list of git commits before sending it for review.

I like to publish notebooks about how to write code for performing a task. Maybe such notebooks would read better if they were structured as a dialog between a teacher and a student? But to make it pleasant to read, I would want to edit both sides of the dialog. When GPT4 gets something a little wrong, it would be easier to fix it directly, even though the dialog would no longer be an accurate representation of GPT4’s capabilities.

Perhaps GPT4’s side of an edited dialog should be renamed to “student” or “bot,” with a note about “bot’s role originally played by GPT4.” If publishing high-quality edited dialogs became popular, they could serve as training data for AIs, and around the flywheel goes.